As mentioned in my last article, I never managed to get the neural net “AI” image creators to generate anything even approximating what I was asking for, let alone anything useful to the project. The only thing I got out of the effort was an inbox flooded with messages from the scummy “AI” image creation companies I had signed up for trial accounts with. One company, NightCafe, had the audacity to email me every single time “someone” wrote a comment under one of my creations. I put “someone” in quotation marks because, as should be expected, I’m pretty sure most of these were generic bot comments, which mysteriously deleted themselves after a short while.

Having said that, the bots seemed realistic enough that I actually found myself hoodwinked into responding to them as if they were real people. It makes me wonder how many comments I’ve responded to online that were actually written by relatively sophisticated bots.

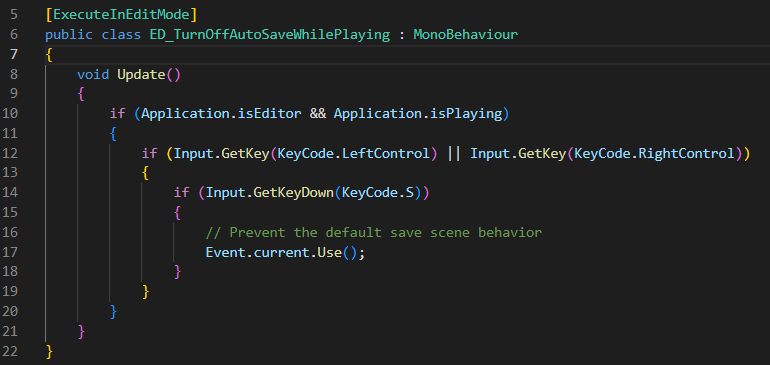

“AI” appears to have nailed the ability to imitate a generic idiot online. Everything else is more hit-and-miss, as with this AI answer to a simple coding question, which is complete nonsense.

To be fair, I have also gotten relatively useful AI coding answers. If I ask a simple question that has good documentation behind it, the AIs tend to spit out a decent answer. However, even when they give wacky, insano-world answers, they do so with such confidence that you can be lulled into forgetting that these are mathematical models with no real intellect. That’s part of the reason why I wrote that first article. The easiest way to pump the brakes on the neural net hype is to show its visual failures, which are both hilarious and undeniable.

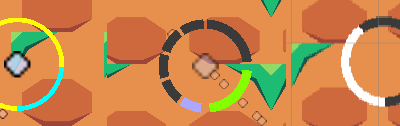

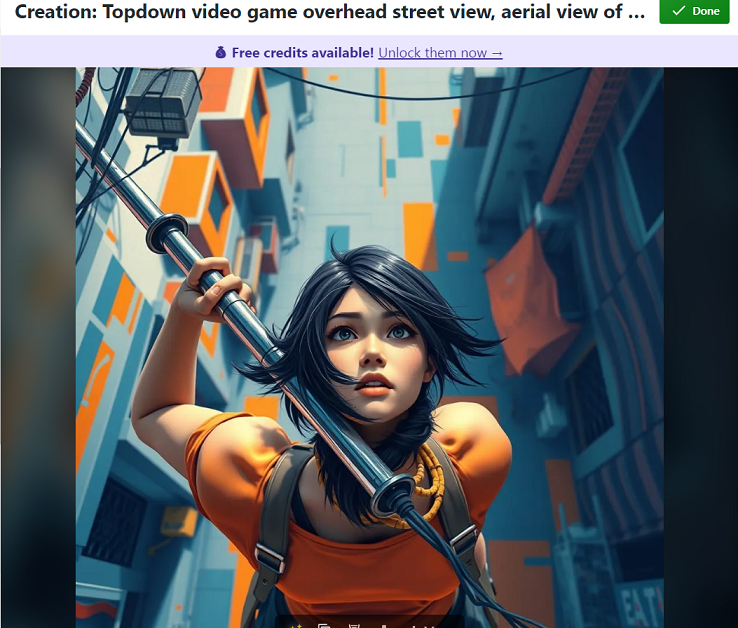

After that piece, I got help from two readers. “Dutchy” offered to try to make worthwhile prototype images on my behalf. I was impressed that he managed to wrangle the AI into producing an actual spritesheet of characters.

He also managed to get them to produce a transparent background, which would save me a ton of time editing the images myself. Unfortunately, while he did better, he just couldn’t get the true top-down perspective that I really wanted. The final art for the game may well be in a more traditional part-side isometric style, but that makes for a lot more work for me in the short term, and these are supposed to be quick throwaway assets that will be replaced anyway.

Having said all that, I’m trying to be objective about this technology, and I have to admit that the AIs do churn out very appealing images, far superior to anything I could create, and far faster. Granted, it’s arguable that they achieve this essentially by plagiarizing the work of human artists, and many of the images have weird graphical problems if you look closely. Still, I can’t pretend not to be impressed by this collection of images that Dutchy produced for me.

On the topic of AI image generation, I got another comment from “Unelectable” that I want to reproduce here.

Hello TDC. I’ve been battling with AI for over a year now, and so I know your pain exquisitely well. I’ll help get you started on a more controlled and cost-efficient set of tools and techniques, and if any of it proves useful maybe you can help me with some game development in turn.

Of course. Just email me.

First of all, paying for AI generation is not going to serve you as well long-term as will getting into the guts of the thing. The common AI art makers online are using their own training models and internal prompt-controls to fine-tune the outputs so ye olde average normie can produce ooo’s and ahh’s more generally. While I’ve seen good results, it will likely be an expensive form of education that prevents you from making your own model modifications and choices that would, for instance, build a pixel-art model that knows top-down perspective design well enough for your uses.

Therefore, I’d recommend you go for StableDiffusion via the tool above. I use Automatic1111. There are other UI variations like ComfyUI, but in this world of AI generations it is best to just pick one of the million variants and just go with it. Installation was easy enough even for a programming-pleb like myself, and the introductory tutorial should help get you familiar with the possibilities.

…

One of the main reasons I suggest StableDiffusion, aside from it being free and locally controlled without guardrails, is because of this website CivitAI. People have created thousands of models trained on specific kinds of art, so that the machine is going to produce art mainly from that kind of style rather than mixing it up with incompatible stuff. This website is invaluable for sourcing these models. Sign-up and use are free.

Unfortunately, I am on Windows 7, so installing Stable Diffusion, while not impossible, has been something of an uphill battle. Even so, I’ve definitely come to see the necessity of in-house training of one of these models. That’s a bit disappointing, since the dream was to quickly churn out prototype image assets, but these “AI” image creators are simply too stupid to generate correct images out of the box. In any case, it’s not my number one priority right now; overhauling the combat is.

Imma give you a hard demand for once, the combat lacks pacing, you have normie speed and then slow down when firing, this is ok and adds tactical layer. But a simple 3 second generation dash can spice this game up a lot. Dashing through a gap is essential of any such a game.

– Dutchy

Speaking of Dutchy, he also left the above comment on another article, and I wholeheartedly agree. Originally, the game was far more complex, with many different guns and even a slide ability. The response I got from some playtesters indicated that they didn’t understand basic things about how the game worked. For example, one playtester thought he’d found a bug where the gun randomly stopped working. In reality, the gun had an overheating system, and it had simply overheated. I don’t blame the tester for thinking the game was broken, as the audio and visual feedback was so poor that none of this was properly communicated to the player.
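For anyone curious what an overheating system like that boils down to, here is a minimal Python sketch. It is a hypothetical illustration, not the game’s actual code: the `Gun` class and all the tuning constants (`HEAT_PER_SHOT`, `MAX_HEAT`, `COOL_RATE`, `RESUME_HEAT`) are assumptions made up for this example. The point is that the gun silently refusing to fire is a distinct state, and that state is exactly what needs loud audio and visual feedback.

```python
from dataclasses import dataclass

# Hypothetical tuning values; the real game's numbers are unknown.
HEAT_PER_SHOT = 10.0   # heat added by each shot
MAX_HEAT = 100.0       # reaching this locks the gun
COOL_RATE = 25.0       # heat removed per second
RESUME_HEAT = 30.0     # gun unlocks once it cools to this level


@dataclass
class Gun:
    heat: float = 0.0
    overheated: bool = False

    def try_fire(self) -> bool:
        """Fire if the gun is not locked; return whether a shot happened."""
        if self.overheated:
            # This is the moment the player needs clear feedback,
            # otherwise a locked gun looks like a bug.
            return False
        self.heat += HEAT_PER_SHOT
        if self.heat >= MAX_HEAT:
            self.heat = MAX_HEAT
            self.overheated = True
        return True

    def update(self, dt: float) -> None:
        """Cool down over dt seconds and unlock once cool enough."""
        self.heat = max(0.0, self.heat - COOL_RATE * dt)
        if self.overheated and self.heat <= RESUME_HEAT:
            self.overheated = False
```

With these made-up numbers, holding the trigger for twelve shots fires ten and then locks the gun until it cools back down to the resume threshold. The gap between `MAX_HEAT` and `RESUME_HEAT` is a design choice: it stops the gun from flickering between locked and unlocked on every shot.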

I also figured that since the game was largely satirical, it didn’t need to be that complicated. However, I think the correction to a much simpler game hurts the experience overall. There are lots of interesting ways to add depth, and they require adding tools to the player’s toolbox. That will make the game something of a mess for a good long while, but I’ve come to believe that this is simply necessary in the long term.

As one of many examples, I added something that approximates a dash but that I believe is more interesting. Meleeing enemies builds up charge, and once it gets high enough, the player can throw their stick at the enemies. This does damage in a line, but more importantly, when the stick lands it stays stuck in the ground, preventing the player from meleeing anything else. In exchange, the player can instantly teleport back to the stick at their leisure.
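The stick mechanic is essentially a small state machine: the player either holds the stick (melee allowed, charge builds) or the stick is in the ground (melee blocked, teleport available). Below is a rough Python sketch of that logic. It is hypothetical, not the game’s real code: the `Player` class, `CHARGE_PER_HIT`, and `THROW_COST` are invented for illustration, and I’m assuming the throw spends all the charge and that teleporting picks the stick back up.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec = Tuple[float, float]

# Hypothetical tuning values, not taken from the actual game.
CHARGE_PER_HIT = 20.0   # charge gained per successful melee hit
THROW_COST = 100.0      # charge required before the stick can be thrown


@dataclass
class Player:
    pos: Vec = (0.0, 0.0)
    charge: float = 0.0
    stick_pos: Optional[Vec] = None  # set while the stick is stuck in the ground

    @property
    def has_stick(self) -> bool:
        return self.stick_pos is None

    def melee_hit(self) -> bool:
        """Melee only works while holding the stick; each hit builds charge."""
        if not self.has_stick:
            return False
        self.charge = min(THROW_COST, self.charge + CHARGE_PER_HIT)
        return True

    def throw_stick(self, landing: Vec) -> bool:
        """Spend the full charge to throw; the stick stays where it lands."""
        if not self.has_stick or self.charge < THROW_COST:
            return False
        self.charge = 0.0
        self.stick_pos = landing
        # Line damage from self.pos to landing would be applied here.
        return True

    def teleport_to_stick(self) -> bool:
        """Instantly move to the thrown stick and pick it back up (assumed)."""
        if self.has_stick:
            return False
        self.pos = self.stick_pos
        self.stick_pos = None
        # A post-teleport effect, such as a damaging wave, would spawn here.
        return True
```

Keeping the stick’s position as the single source of truth for whether the player is armed means melee and teleport can never both be available at once, which is the trade-off the mechanic is built around.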

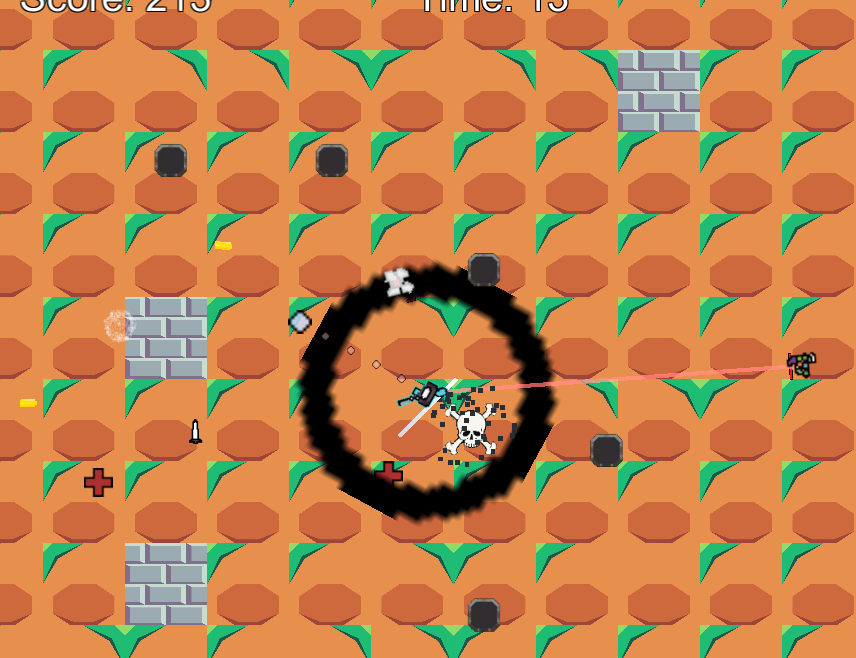

I also added a wave that is generated post-teleport, because teleporting far away from danger was initially too good, and I wanted to incentivize more aggressive and interesting gameplay. As you can see, graphically it is not exactly a finished effect. Likewise, while a simple bar above the minimap to the right shows the charge, I want the charge level to be obvious while looking directly at your avatar. That may involve creating the stick as a separate graphical asset and having it glow as charge accumulates, or something else of that nature.

Also, I might scrap this entire idea and just add a regular dash, or go with something similar.

I’ve also added the classic sniper and shotgun, a stationary turret, and an expanding orb alt fire for the main gun that is somewhat hard to explain without seeing it in motion. It’s just not worth making a video right now rather than in a few days, once all of this has been implemented to at least a moderate degree and we can see what works and what doesn’t.